Synsynth – The Synaesthesia Synthesizer (or ‘the roomwide symphony’)

- 10:35 pm

- May 18, 2020


- construction of my room, providence - making chords using objects in my room

While creating sound pieces earlier in the semester, I often found myself just trying to capture the sounds within spaces, or the sounds created by the objects I was using. As we finish the course, I find myself unsatisfied by this, and I wanted to take it to the next level. I wanted to create a piece that focuses less on the sounds the object *can* make and more on the object’s presence in the space, and on the space as an atmosphere. I took inspiration from a reading in the semester to think of the space as a network of all that is within it: everything that is put in the space interacts with everything else and affects the inhabitants of the space. I wanted to use this concept as the basis of my project, rather than just the sounds created from the space. This is mainly because I started to feel that the sounds an object can create aren’t really representative of the object. I can shake a water bottle in my room and it will make a similar sound to someone across the world shaking a water bottle in their room, but these objects are used very differently: one might be a single-use disposable bottle, while the other might have been used for years. I decided I wanted to make sure each object had its own individuality in the sound. It made me fantasize about some sort of system that would let us construct an object in our mind from a sound, almost like a barcode, along with how that object interacts with the space around it. Bringing this back to the idea of connections, I started thinking about the space as an orchestra, played using the objects’ existence in the space, not just by using them to make sound.

I decided to take this project in two parts. The things I wanted to focus on were the physical presence of an object within a space, and the way we interact with objects and how they affect us. Using these, I would figure out a way to create a sonic representation of the object. I then wanted to be able to play these sounds together, creating a chord or harmony using these objects. It was to be versatile as well: a tool that wasn’t limited to this project and could extend beyond this use.

I decided therefore to make a synthesizer, not out of physical parts but within a digital space, using programming. Synthesizers have so many variables that can be tracked and changed numerically that I could apply formulas and algorithms to the process, so nothing would have to be set manually. I started with the idea that this program would take in a picture of the object and a short description. I wanted these pictures to be taken without much effort, using only the lighting that was already there, in the space where the objects usually are. I wanted the description to convey the viewer’s relationship to the object.

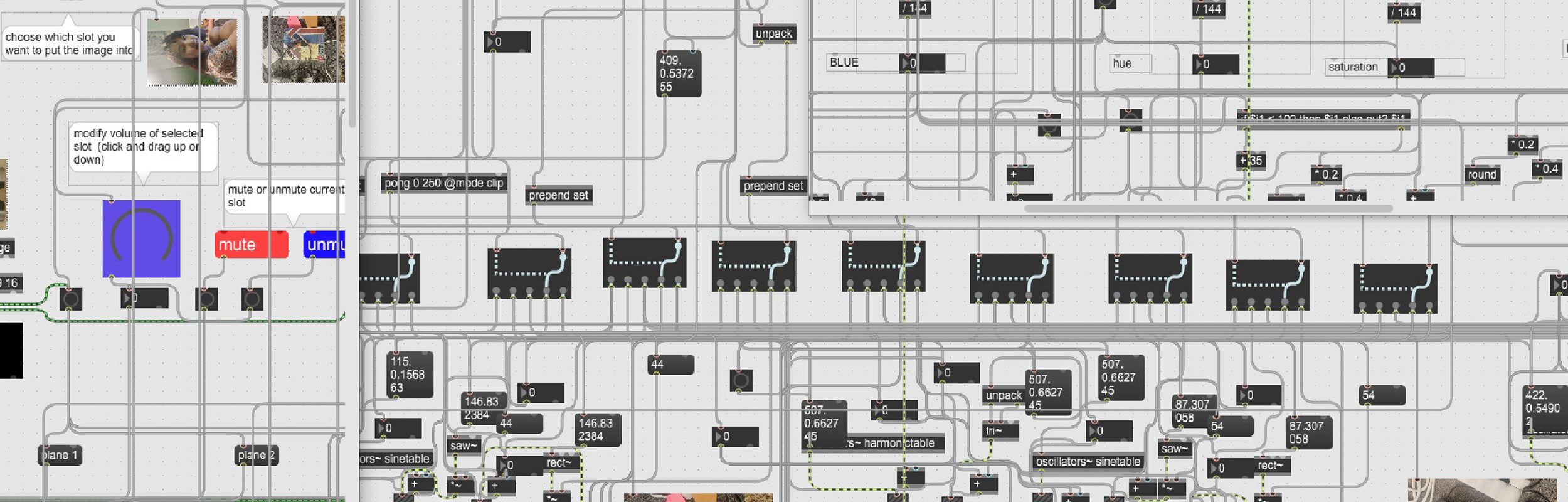

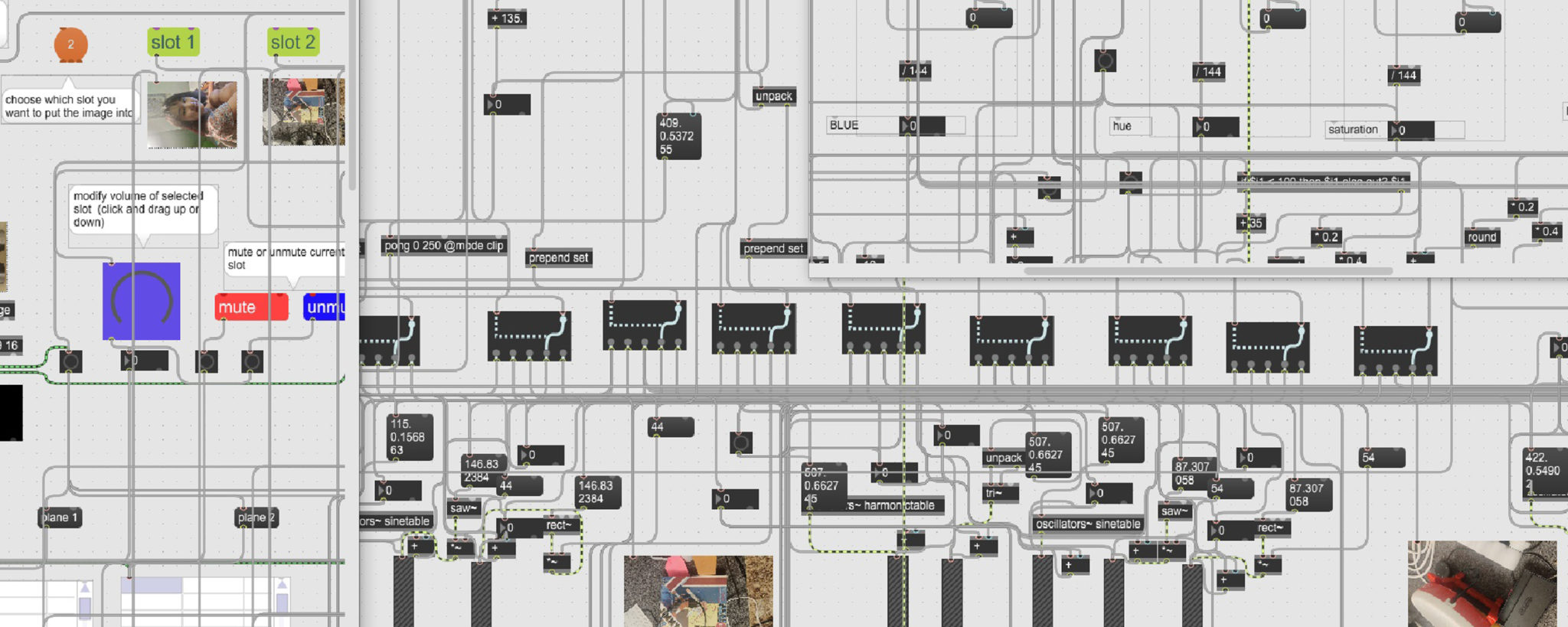

I decided to use Max by Cycling ’74, a visual programming environment that lets you program as if you were wiring modules together. It is often used in audio/visual art and engineering because of its simple interface, wide variety of plugins, and versatility in input and output. By the end of the project I had not needed any external plugins or patches.

A simple description of how the program works is this: the picture is broken down into the average values across its pixels (their RGB and HSK values), and these are used to control 5 different waveforms. The short description affects the gain of each of these waveforms. If the description is negative, the sound will be more artificial and jagged; if it is positive, the sound will be smoother and more natural.

[What follows is a technical explanation of how it works. I left the decision making out of this part, since I don’t want people to feel obligated to read it. I added a line that shows where the technical description ends, so you can skip forward to that.]

I started by figuring out how to read an image. The program asks you for the name of an image, then reads it from the file. I downsize the image to 9 by 16 pixels, using the jit (Jitter) suite of objects to turn it into a Jitter matrix. This makes it an image based on a matrix (a bit like an Excel sheet). I then made the program split this matrix into two: one that has the RGB values of each of the pixels, and one that has the HSK values (hue, saturation, lightness, more commonly abbreviated HSL) of each of the pixels. I split these further to get a separate matrix for each of these values.
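To make the image step concrete outside of Max, here is a minimal Python sketch of the same idea. It is not the actual patch: the function names `downsample` and `split_planes` are hypothetical, the image is assumed to be already decoded into a grid of (r, g, b) tuples in the 0 to 255 range, the 9 by 16 size is assumed to mean 9 wide by 16 tall, and the lightness plane is labelled `L` here rather than K.

```python
import colorsys


def downsample(pixels, out_w=9, out_h=16):
    """Box-average a grid of (r, g, b) tuples down to out_h rows of out_w cells."""
    in_h, in_w = len(pixels), len(pixels[0])
    small = []
    for oy in range(out_h):
        y0 = oy * in_h // out_h
        y1 = max(y0 + 1, (oy + 1) * in_h // out_h)
        row = []
        for ox in range(out_w):
            x0 = ox * in_w // out_w
            x1 = max(x0 + 1, (ox + 1) * in_w // out_w)
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            # Average each colour channel over the block of source pixels.
            row.append(tuple(sum(p[c] for p in block) / len(block) for c in range(3)))
        small.append(row)
    return small


def split_planes(small):
    """Split the small image into six single-value matrices: R, G, B, H, S, L."""
    names = "RGBHSL"
    planes = {name: [] for name in names}
    for row in small:
        cells = {name: [] for name in names}
        for r, g, b in row:
            # colorsys works on 0..1 floats and returns (hue, lightness, saturation).
            h, l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
            for name, value in zip(names, (r, g, b, h * 255, s * 255, l * 255)):
                cells[name].append(value)
        for name in names:
            planes[name].append(cells[name])
    return planes
```

Each entry of `planes` plays the role of one of the single-value matrices described above, scaled to the same 0 to 255 range so the later steps can treat them alike.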

After this has been input, I then ask for the description of the object. I’ll get back to this later so it’s easier to understand. I made a counter program that selects each cell in a matrix one by one, and I use it to go through and output the contents of each cell of the matrices containing the image’s values. I use these to create an average for each attribute. I now have the average values of Red, Green, Blue, Hue, Saturation, and Lightness. The next step is to convert these into frequencies.
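The cell-by-cell counter can be pictured as a plain nested loop. This is only a sketch of the averaging idea in Python, with hypothetical names (`plane_average`, `all_averages`) and an assumed dictionary of matrices keyed by attribute letter; the real patch does this with Max objects.

```python
def plane_average(plane):
    """Visit every cell of one matrix in turn and return the mean of its values."""
    total = 0.0
    count = 0
    for row in plane:
        for cell in row:
            total += cell
            count += 1
    return total / count


def all_averages(planes):
    """Average each attribute matrix (for example R, G, B, H, S, L) separately."""
    return {name: plane_average(plane) for name, plane in planes.items()}
```

For instance, `plane_average([[0, 255], [255, 0]])` gives 127.5, the midpoint of the range.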

There are 5 different waveforms/wavetables that I use, meaning the different shapes the sound wave takes on in the synthesizer. I split these into two groups: artificial/jagged ones and smooth/natural ones. The artificial ones are a sawtooth and a rectangle waveform, and the natural ones are a triangle, sine, and harmonic waveform. For the artificial ones, I used hue as the pitch and saturation as the amplitude of the wave. For the smooth waveforms, I used (R+G+B)/3, the average RGB value, as the pitch, and the lightness as the amplitude. I have these go through their oscillators, and presto, you have a sound. For some of these values, such as the lightness, I use some mathematical formulas or logic to change the value, as things like saturation will sometimes be so average that they don’t create much variation between pictures.
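A rough Python sketch of this mapping follows. Several things in it are assumptions rather than details of the patch: the linear mapping from a 0 to 255 value onto 110 to 880 Hz, the choice of four partials for the "harmonic" wave, and all function names. The extra formulas applied to lightness and saturation are not reproduced.

```python
import math


def saw(phase):
    return 2.0 * phase - 1.0


def rect(phase):
    return 1.0 if phase < 0.5 else -1.0


def tri(phase):
    return 4.0 * abs(phase - 0.5) - 1.0


def sine(phase):
    return math.sin(2.0 * math.pi * phase)


def harmonic(phase, partials=4):
    # Assumed shape: a few sine partials with 1/k weights, scaled to stay within -1..1.
    norm = sum(1.0 / k for k in range(1, partials + 1))
    return sum(math.sin(2.0 * math.pi * k * phase) / k for k in range(1, partials + 1)) / norm


def value_to_freq(value, lo=110.0, hi=880.0):
    """Hypothetical linear map from a 0..255 average onto a frequency in Hz."""
    return lo + (hi - lo) * value / 255.0


def voice_settings(avg):
    """Pitch and amplitude for the two waveform groups, from the six averages."""
    rgb_mean = (avg["R"] + avg["G"] + avg["B"]) / 3.0
    return {
        "jagged": {"freq": value_to_freq(avg["H"]), "amp": avg["S"] / 255.0},
        "smooth": {"freq": value_to_freq(rgb_mean), "amp": avg["L"] / 255.0},
    }


def render(wave_fn, freq, amp, seconds=1.0, rate=44100):
    """Sample one oscillator as a list of floats."""
    n = int(seconds * rate)
    return [amp * wave_fn((freq * i / rate) % 1.0) for i in range(n)]
```

With this sketch, the sawtooth and rectangle would be rendered with the `jagged` settings, and the triangle, sine, and harmonic waves with the `smooth` settings.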

This wasn’t enough, however, as I wanted there to be more control. Each value except K (so R, G, B, H and S) also controls the gain of one oscillator: R -> harmonic, G -> sine, B -> triangle, H -> sawtooth, S -> rectangle. This isn’t all that affects the final sound. I created a selection of buttons labelled with different possible descriptors of the object, such as ‘is used to eat/drink’, ‘is broken’, ‘reminds me of stuff I don’t want to do’. I separated these descriptors into negative and positive, but made sure to mix them up in the actual visual presentation. These buttons are linked to a counter that counts how many positive or negative ones are pressed. Those numbers are then processed: the positive value is added to the gain of all the natural-sounding synths, and around half that value is subtracted from the jagged synths. The negative value is added to the gain of the jagged synths, and a smaller version of that value is subtracted from the natural synths. I found that I had to make the positive value smaller, as the natural synths get too loud very easily.
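The gain logic can be summarised in a few lines of Python. This is a sketch under assumptions, not the patch itself: the per-button `step` of 0.1, the single `cut` factor of 0.5 used for both subtractions, the clamp at zero, and the name `oscillator_gains` are all illustrative. Only the channel-to-oscillator pairing and the add/subtract directions come from the description above.

```python
NATURAL = ("harmonic", "sine", "triangle")
JAGGED = ("sawtooth", "rectangle")
CHANNEL_TO_OSC = {
    "R": "harmonic",
    "G": "sine",
    "B": "triangle",
    "H": "sawtooth",
    "S": "rectangle",
}


def oscillator_gains(averages, positive_count, negative_count, step=0.1, cut=0.5):
    """Combine colour-based gains with the positive/negative button counts."""
    # Base gain of each oscillator comes from its own colour channel (0..255 -> 0..1).
    gains = {osc: averages[ch] / 255.0 for ch, osc in CHANNEL_TO_OSC.items()}
    pos = positive_count * step  # assumed size of one positive button press
    neg = negative_count * step  # assumed size of one negative button press
    for osc in NATURAL:
        gains[osc] += pos - cut * neg
    for osc in JAGGED:
        gains[osc] += neg - cut * pos
    # Assumption: a gain never drops below silence.
    return {osc: max(0.0, g) for osc, g in gains.items()}
```

Because the subtracted amount is smaller than the added amount, pressing more buttons of either kind raises the total gain, which matches the idea that more descriptions make a louder sound.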

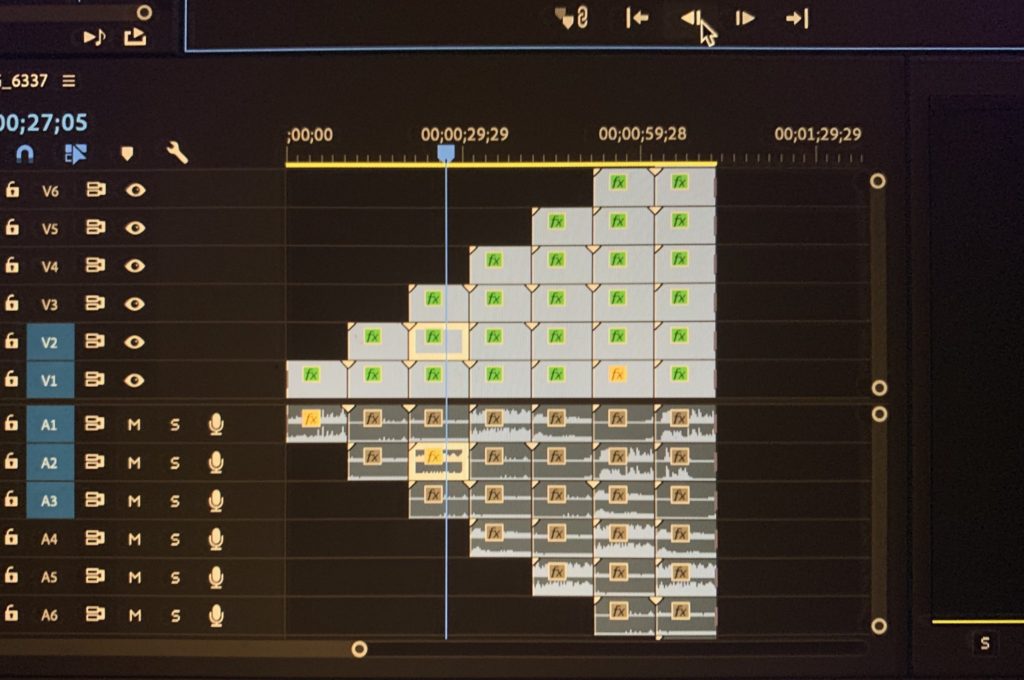

I then made a system that lets you choose a ‘slot’ to put the image and description into. There are 5 slots in total, which can be swapped at the press of a button. I then made a controller that lets you apply a modifier to the overall volume of the sound in a slot, or mute or unmute a slot, so you can play and manipulate objects together. I also created a small system that records the sound output as a WAV file.
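As an illustration of the slot idea, the sketch below mixes a handful of slots and writes the result as a 16-bit mono WAV using Python's standard `wave` module. The slot layout (a dictionary with `samples`, `volume`, and `muted` keys), the hard clipping to -1..1, and the function names are assumptions made for this example, not how the Max patch stores things.

```python
import struct
import wave


def mix_slots(slots):
    """Sum the unmuted slots, each scaled by its own volume, and clip to -1..1."""
    active = [s for s in slots if not s["muted"]]
    if not active:
        return []
    length = max(len(s["samples"]) for s in active)
    mix = [0.0] * length
    for s in active:
        for i, x in enumerate(s["samples"]):
            mix[i] += s["volume"] * x
    return [max(-1.0, min(1.0, x)) for x in mix]


def write_wav(samples, target, rate=44100):
    """Write float samples in -1..1 as 16-bit mono PCM to a path or file object."""
    frames = b"".join(struct.pack("<h", int(x * 32767)) for x in samples)
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(frames)
```

Calling `write_wav(mix_slots(slots), "room.wav")` would save the combined sound of whichever slots are currently unmuted; the file name here is just a placeholder.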

[TECHNICAL DESCRIPTION END]

There were a couple of important decisions I had to make while making this project. I decided to use RGB and HSK as my variables because I felt that if I tried to use more, it would be hard to make each characteristic have a meaningful impact on the sound, and I didn’t want a bunch of things that made no difference to the result. I also had to decide what each characteristic would do. When thinking about how hue and saturation would control pitch and amplitude, I chose hue to be the pitch. I felt that choosing something like lightness or saturation for the pitch was a bit obvious. Those two values are also more of a scale (a saturation or lightness of 0 will be duller or darker than 255), whereas hue just dictates colour. This was complemented by the fact that saturation made sense to me as amplitude: one could almost say it is the ‘loudness’ of the visual aspect of an image.

I made each value control the gain of one of the synth’s oscillators, as that would mean more variance between the sounds of each image. While there was some mathematical processing to do, it made sense to me: I had only used R, G and B combined for the pitch, and I wanted each of them to affect the sound individually as well.

I made the buttons affect the gain as well. The description tags are classified into negative and positive, each affecting different parts of the synthesizer. The sound gets more jagged if the object is described more negatively, and smoother if it is described positively. Because the subtracted values are reduced, the more descriptions are added, the louder the sound will be. I did this because it felt right that the more emotions are attached to the object through descriptions, the louder its sound should be.

I figured 5 different ‘slots’ to put inputs into made sense, as 5 of the sounds running at default volume is already pretty chaotic and loud, so I didn’t want to allow more than 5. Because some sounds would entirely overpower others, I added a slider that adjusts each slot’s volume individually, allowing for a more curated experience.

I then gathered material to use on the synthesizer. I used objects from my own room here in Providence, but I also asked my parents to do the same for my room back in London, and asked friends from around the globe to send me images and descriptions from their own living spaces. Using these, I could create orchestras from all these people’s rooms, and I even tried combining some of them, creating mashups of my own and my friends’ living spaces.

There is a wall between me as the creator of the sound and the sound itself. While I made the program that transforms physical presence and atmosphere into sound, I do not understand the transformation that it goes through. If I did, I feel that would be too much control. I cannot see the atoms that make up the structure of each object, and this disconnection from the transformation feels similar to that. I cannot fully understand the makeup of the sound, which makes it more like the objects around me.

A small thing I noticed as well is how similar the sound of multiple objects playing at once sounds to traffic or the city. I found this funny, especially in the current context of self-isolation, that I can make a cityscape out of the objects in my room.

I really enjoyed being able to do something like building a synthesizer, something I never thought I’d do, as well as learning how to use Max by Cycling ’74, something I definitely want to continue learning in the future.

- CJ's room, singapore

- Dexter's room, london

- Elizabeth's room, singapore

- leah's room, texas

- Mehek's room, dubai

- nicolas' room, providence

- Ivery's room, providence

- 3 room mashups (not 3 rooms, 3 mashups)

I’m really proud of this tool I have created, and I feel it was a complete success. The droning noises are not very understandable, but I feel that is the correct outcome. This is a new way of representing objects that encompasses the way we interact with them, so something that feels weird and indecipherable makes sense to me. The sounds themselves are almost enchanting as well, with their weird characteristics; knowing that the objects that sit next to me are what made them makes them all the more fascinating. I find myself trying to pick apart the sounds to see which parts of the object create which part of the sound.

I’m extremely happy and proud of my creation. I hope to use this base as a tool for future investigations and experiments. Perhaps I can modify it to take different visual inputs, or a live visual input? I feel very satisfied getting this far in the project. I don’t want to say completing it, as I know that I will be bringing it with me into the future.
